In the evolving landscape of music production, the collaboration between artificial intelligence and human creativity has opened new frontiers. AI-generated melodies are no longer a futuristic concept but a present-day tool, offering composers and producers a wealth of raw material. However, the true artistry lies in the human touch—the nuanced refinement that transforms algorithmic output into emotionally resonant music. This process, often referred to as human polishing of AI melodies, is where technology meets soul, and where the cold precision of machines is warmed by the intuition and experience of musicians.

The initial output from AI melody generators can be impressive in its complexity and novelty. These systems, trained on vast datasets of musical compositions, can produce sequences that are technically sound and structurally coherent. Yet, they often lack the subtle imperfections and emotional depth that characterize human-composed music. This is where the human editor steps in, listening not just for correctness but for feeling. The goal is to infuse the melody with a sense of purpose and narrative, ensuring it connects with listeners on a deeper level.

One of the primary techniques in human polishing involves dynamic shaping. AI-generated melodies might adhere too strictly to rhythmic or harmonic patterns, resulting in a mechanical feel. Human editors introduce variations in timing, velocity, and articulation—micro-adjustments that breathe life into the notes. For instance, a slight delay on a key note can build anticipation, while a subtle accent can emphasize emotional highlights. These changes, though small, transform a sequence of pitches into a compelling musical phrase.
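To make the idea of micro-adjustments concrete, here is a minimal sketch that treats a melody as a list of MIDI-style notes. It applies three of the adjustments described above: a small random timing jitter, a deliberate delay on chosen notes, and a velocity accent on others. The `Note` type, the `shape_phrase` function, and every default value (a 0.05-beat delay, a +15 velocity accent, ±0.01 beats of jitter) are hypothetical illustrations, not settings from any particular tool.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number (60 = middle C)
    start: float     # onset time in beats
    duration: float  # length in beats
    velocity: int    # MIDI velocity, 1-127


def shape_phrase(notes, delay_on=(), accent_on=(), delay=0.05,
                 accent=15, jitter=0.01, seed=0):
    """Return a new list of notes with hypothetical 'human' shaping applied.

    - every onset is nudged by up to +/- `jitter` beats (seeded, so repeatable)
    - notes whose index is in `delay_on` are pushed late by `delay` beats
    - notes whose index is in `accent_on` get `accent` extra velocity
    """
    rng = random.Random(seed)
    shaped = []
    for i, note in enumerate(notes):
        start = note.start + rng.uniform(-jitter, jitter)
        velocity = note.velocity
        if i in delay_on:
            start += delay       # a slight delay builds anticipation
        if i in accent_on:
            velocity += accent   # an accent marks an emotional highlight
        shaped.append(replace(
            note,
            start=max(0.0, start),                  # never before the downbeat
            velocity=max(1, min(127, velocity)),    # stay in MIDI range
        ))
    return shaped


# Usage: a rigidly quantized four-note line, delaying the third note
# and accenting the fourth.
melody = [Note(60 + 2 * i, float(i), 1.0, 80) for i in range(4)]
for n in shape_phrase(melody, delay_on={2}, accent_on={3}):
    print(n)
```

Seeding the random generator keeps the "imperfections" reproducible, so an editor can audition a take and get exactly the same feel back.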

Another critical aspect is harmonic context. AI systems may generate melodies that are harmonically functional but lack color or tension. Human musicians excel at reharmonizing or adjusting chord progressions to enhance the melodic line. They might alter a chord voicing, add a suspension, or introduce a modulation to create moments of surprise or resolution. This layer of harmonic richness is often beyond the current capabilities of AI, relying on cultural and emotional cues that humans intuitively understand.
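As one small, concrete instance of "adding a suspension", the sketch below swaps the third of a chord for the fourth (a "sus4" chord) or the second ("sus2") above the root. Playing the suspended chord and then the original chord gives the familiar tension-and-release effect. This is a simplification: a suspension in the strict contrapuntal sense also prepares the dissonant note by holding it over from the previous chord. The `suspend` function and its assumption that the first pitch in the list is the root are illustrative only.

```python
def suspend(chord, kind="sus4"):
    """Replace each third above the root with a 4th (sus4) or 2nd (sus2).

    `chord` is a list of MIDI pitches; chord[0] is assumed to be the root.
    Minor (3 semitones) and major (4 semitones) thirds are both replaced,
    in whatever octave they appear.
    """
    if kind not in ("sus4", "sus2"):
        raise ValueError("kind must be 'sus4' or 'sus2'")
    root = chord[0]
    step = 5 if kind == "sus4" else 2   # semitones above the root
    result = []
    for pitch in chord:
        interval = (pitch - root) % 12
        if interval in (3, 4):
            # pitch - interval has the root's pitch class in this note's octave
            result.append(pitch - interval + step)
        else:
            result.append(pitch)
    return result


# Usage: C major (C4 E4 G4) suspended, then resolved back to the original.
c_major = [60, 64, 67]
progression = [suspend(c_major), c_major]
print(progression)
```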

Orchestration and timbre play a significant role in the polishing process. A melody generated by AI is often neutral in terms of instrumentation, so humans assign it to specific instruments based on character and context. The same sequence of notes can evoke entirely different emotions when played by a violin rather than a synthesizer. Editors consider timbral qualities such as attack, decay, brightness, and warmth, and how they interact with the melody's contour. These choices turn abstract patterns into vivid sonic experiences.

Human editors also focus on structural coherence. While AI can generate sections like verses and choruses, it may not grasp the overarching narrative of a piece. Musicians refine transitions, build dynamics, and ensure that the melody evolves in a way that feels intentional and satisfying. They might extend a phrase for dramatic effect or truncate another to maintain momentum. This big-picture thinking is essential for creating music that feels whole and purposeful.

Moreover, emotional intentionality is a uniquely human contribution. AI lacks subjective experience—it doesn't know what it feels like to be joyful, heartbroken, or nostalgic. Human editors imbue melodies with these emotions by drawing on personal and cultural contexts. They might slow the tempo for a melancholic feel or introduce a playful rhythm for lightness. These decisions are informed by empathy and artistic vision, elements that machines cannot replicate.

The collaboration between AI and human is not a replacement but an augmentation. AI serves as a boundless source of inspiration, generating ideas that might not occur to a human mind constrained by habit. The human, in turn, acts as a curator and alchemist, transforming these ideas into art. This synergy allows for unprecedented creative exploration, where artists can experiment with more ideas in less time, focusing their energy on refinement rather than generation.

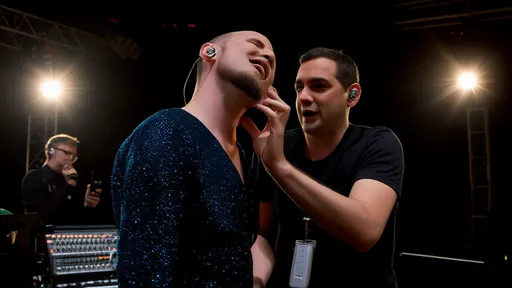

In practice, many producers now integrate AI tools directly into their workflows. They might generate hundreds of melodies quickly, then select the most promising ones for development. The polishing phase then becomes a deeply engaging process, akin to sculpting raw marble into a refined statue. Each adjustment (a note bent, a rhythm shifted, a harmony deepened) is a step toward a more expressive and authentic piece of music.

As this practice grows, it challenges traditional notions of authorship and creativity. Is the artist the one who generates the idea or the one who shapes it? In reality, both roles are vital. The AI provides the spark, but the human fans it into flame. This partnership reflects a broader trend in technology, where tools enhance human capability rather than diminish it. In music, it leads to a new era of co-creation, blurring the lines between organic and synthetic artistry.

Looking ahead, the techniques for human polishing of AI melodies will continue to evolve. As AI systems become more advanced, they may learn to incorporate some of these nuances independently. However, the human touch will likely remain irreplaceable for its depth of feeling and cultural specificity. The future of music may lie in this balanced dialogue—between the infinite possibilities of machine generation and the discerning wisdom of human refinement.

Ultimately, the art of polishing AI-generated melodies is about finding the soul in the machine. It requires technical skill, yes, but also intuition, empathy, and a keen ear for what moves us. In this process, musicians are not just editors but interpreters, translating the language of algorithms into the universal language of emotion. The result is music that honors both innovation and tradition, offering listeners something truly unique: a harmony of human and machine.

By /Aug 22, 2025